Robots for training carers, sensors that monitor people as they sleep, and an app that can detect if someone is in pain – could this be the future of care?

The care sector is increasingly using technology and Artificial Intelligence (AI) to look after the UK’s ever-growing elderly population.

Potential Benefits and Current Applications

Despite the advancements, experts have urged people to consider the potential risks of an overreliance on AI in the industry. “AI can only be part of the solution but not the whole solution,” says Dr Caroline Green from the University of Oxford.

Thomas Tredinnick, the boss of AllyCares, shares how his company uses sensors to monitor care home residents’ rooms overnight, listening out for anything out of the ordinary. The system sends an audio recording of any incident to the carers on shift, who decide how to act. Mr Tredinnick claims it spares staff from carrying out regular nightly checks, so residents sleep better. He also says it has helped reduce preventable health events that lead to hospital admissions, by spotting things like falls and chest infections before they escalate.

Christine Herbert shared her experience with the technology being used for her 99-year-old mother Betty. Initially uncertain, she was convinced after the home presented data showing how her mother was monitored without disturbance during the night.

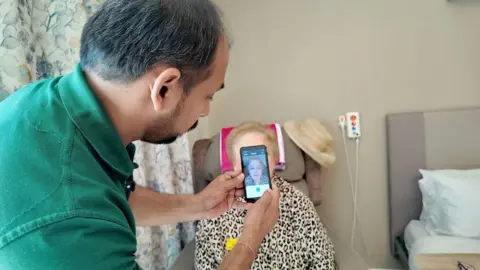

Staff at Elmbrook Court care home in Wantage, Oxfordshire, use an AI-powered smartphone app called PainChek to identify pain in residents, particularly those who are non-verbal. Carers scan a resident’s face for indicators of pain and answer questions, then receive an instant percentage score indicating the level of pain. Aislinn Mullee, the deputy manager, said it has made a “huge difference” in assessing pain medication needs with GPs and in reassuring families.

Ethical Considerations and Risks

Dr Green, director of research at the Institute for Ethics in AI, highlighted how AI systems could be susceptible to biases, amplifying discrimination and stereotyping. She also recognised worries around sharing personal data. There is not yet an official government policy on the use of AI in social care, and Dr Green stresses that how that policy takes shape matters: people should retain choices, including the option to opt out of AI in their care.

Professor Lee-Ann Fenge, professor of social care at Bournemouth University, echoes this caution, stating new technology should enhance, not replace, the work already happening. She added that time is needed to “think about some of the ethical challenges” of monitoring people.

Training and Future Developments

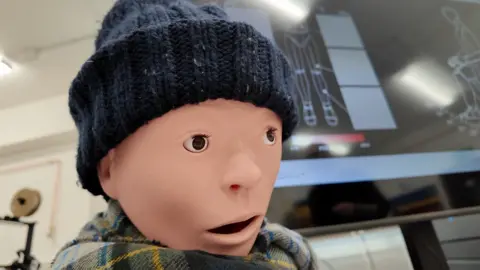

At the University of Oxford’s Robotics Institute, Dr Marco Pontin and his team are exploring AI for training carers. They have created a robot that reacts to human touch and can be programmed to feel pain, flinching if touched too forcefully. This robot will be trialled with occupational therapy students, acting as a “digital twin” of real patients to allow carers to replicate real-world scenarios.

Challenges in the Care Sector

The care sector faces significant challenges, including a growing ageing population and a reliance on overseas workers, with recent statistics showing a sharp fall in visas issued for health and social care workers. “We’re reliant on migrant workers to keep social care going at the moment,” says Prof Fenge, reinforcing her view that technology should not be used simply to fill these gaps.

Government Approach and Expert Recommendations

The UK government recently announced a “test and learn” approach to funding AI in the public sector. However, Dr Caroline Green continues to promote a cautious approach to AI in care. She believes it can help with administrative and operational tasks but cannot replace the human touch. She warns against viewing AI as a “panacea” for staffing shortages and growing demand, emphasising the continued need to invest in people and professional carers.

A Department of Health and Social Care (DHSC) spokesperson highlighted the government’s commitment to harnessing technology to transform social care, citing examples like AI-powered fall detection and tools that automate paperwork to free up staff time. They stated that making better use of AI aligns with their 10 Year Health Plan, which aims to shift towards prevention, community care, and digital solutions.

While AI presents promising opportunities to enhance social care through innovative tools for monitoring, pain detection, and training, experts stress that it should be viewed as a supplementary tool, not a replacement for human interaction and care. Careful consideration of ethical implications, data privacy, and the preservation of patient choice is crucial as the sector navigates the increasing integration of AI.
